System Architectures and Load Balancers

HLD Day3

Section 1: Architectures

System architecture is the art of building sustainable systems: deciding which components a system has and how they interact.

There are three common types of architecture to consider when designing a system:

Monolithic architecture

Service Oriented Architecture

Microservices

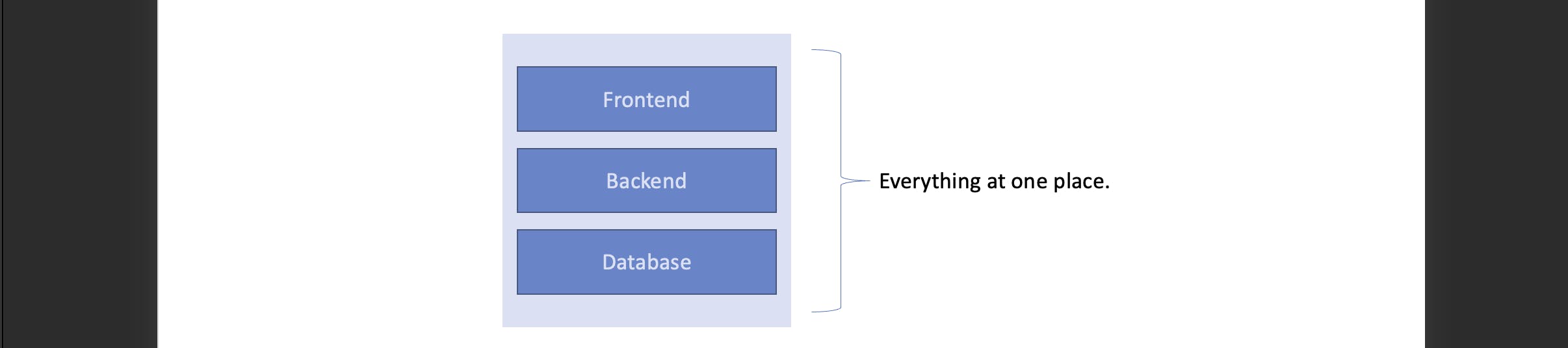

Monolithic systems:

A system in which the frontend, backend and database are all bundled together in one place.

When should you use a monolithic system?

When you are just getting started.

When you do not want to spend much just yet.

When you are not sure of the future of the application.

Some advantages of using a monolithic system:

Since everything is in one place, components talk to each other through in-process calls instead of network hops, so latency is low.

Security is easier and less expensive to manage, since there is only one system to secure.

Testing is comparatively easier, since the whole system can be run and tested as one unit.

Since the system is less complex, developers are less confused, which in turn increases productivity.

Scaling in monolithic systems:

Scaling is complex here: you cannot scale selectively, so you have to scale the whole system at once.

- For instance, in a read-heavy system, suppose only the frontend needs to be scaled. Since the frontend, backend and database are bundled together, we cannot scale the frontend alone; we have to scale all three together.

Tight coupling makes scaling less feasible and increases the time to production.

Since the whole system is scaled as one unit, we are forced to keep the same tech stack, leaving little room to explore other stacks.

Deploying another instance of the system takes a long time, as every instance needs all the components.

Note: If the system is not made highly available (distributed across multiple instances), it becomes a single point of failure.

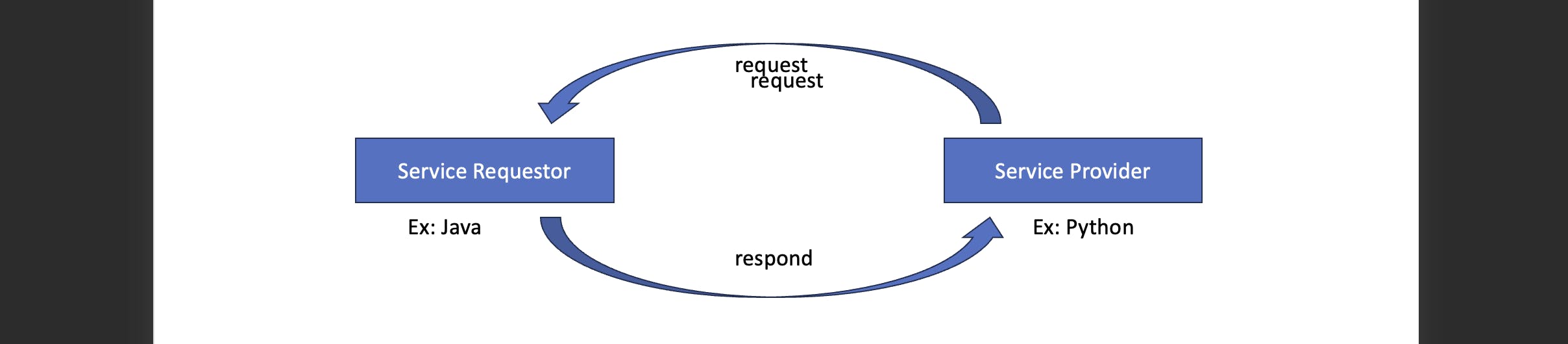

Service-Oriented Architecture (SOA):

This architecture is built on two basic components, the service requestor and the service provider, which can be implemented in different tech stacks.

Note: These services must be granular (distinct), so a separate system is created for each service.

What are some of the advantages of a service-oriented architecture?

We can scale selectively, scaling only the systems that need it.

We are no longer tied to a single tech stack; different systems can use different stacks.

The systems are loosely coupled, which increases flexibility and reduces time to production.

A change in one system does not affect the others.

Deployment time decreases significantly.

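A failure in one service need not bring down the whole system, so there is no single point of failure (provided each service is itself made highly available).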

What are some of the challenges of a service-oriented architecture?

Latency:

- Since the systems communicate over the network, every interaction adds network hops, so latency increases.

Security:

- With many more systems, securing all of them becomes complex.

- Securing all these systems also requires more money, so it becomes expensive as well.

Testing:

- Testing such a large system, with many services and interactions between them, is also complex.

Confused developers:

- With many systems built on multiple tech stacks, each with its own logic, it is difficult for a new developer to understand all of it.

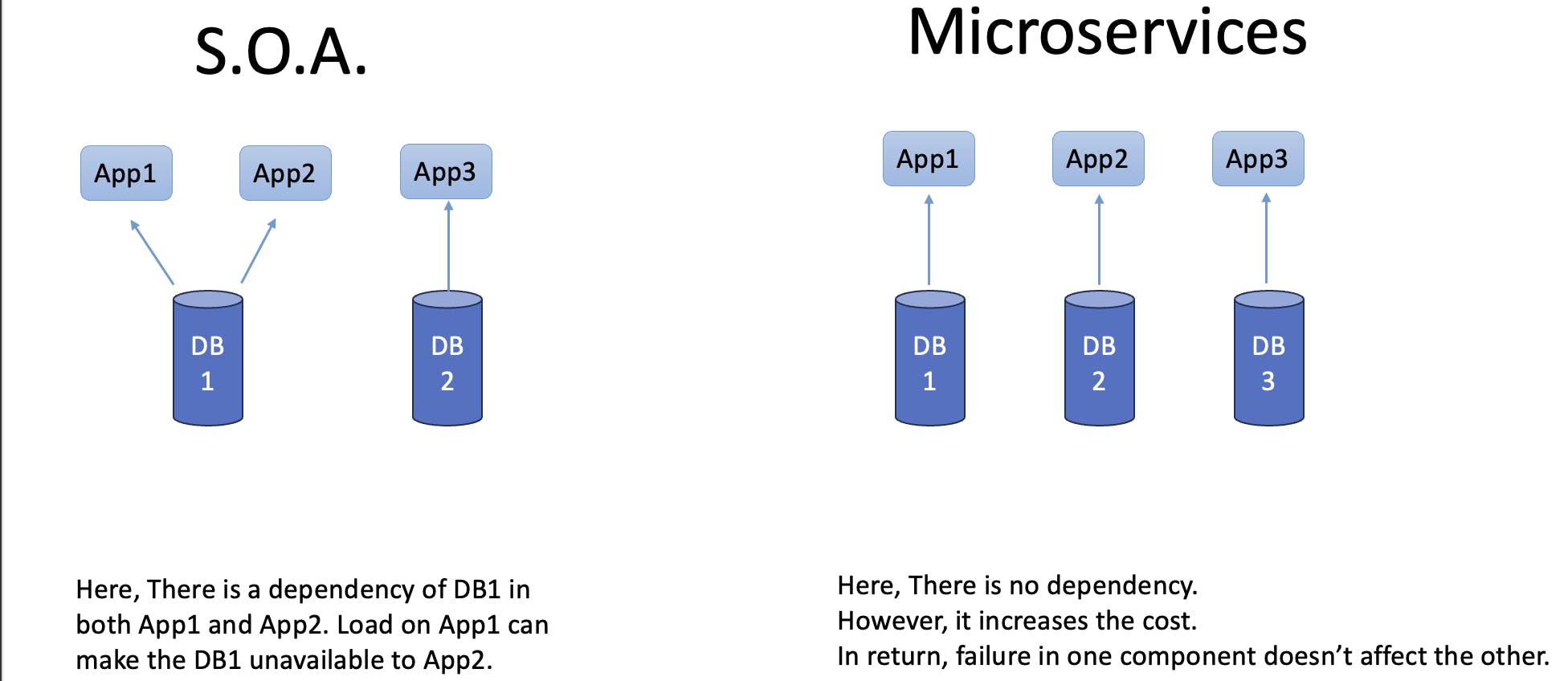

Microservices:

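Microservices are essentially a stricter, more fine-grained version of service-oriented architecture: the system is split into small services, each responsible for a single piece of functionality and deployable independently.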

Note: A component should be able to communicate with other components, but it should not bring the system down by overloading any of them.
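The note does not say how a component avoids overloading another. One common technique (an assumption here, not the author's stated method) is to rate-limit outgoing calls with a token bucket. The minimal Python sketch below uses a hypothetical downstream call, `call_inventory_service`, and an injectable clock so the behaviour can be checked without waiting.

```python
import time
from typing import Callable


class TokenBucket:
    """Allows bursts of up to `capacity` calls, refilled at `rate` tokens per second."""

    def __init__(self, capacity: int, rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = float(capacity)  # start with a full bucket
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill for the time elapsed since the last check, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # over the limit: the caller should back off


def call_inventory_service(bucket: TokenBucket) -> str:
    # Hypothetical downstream call, only attempted when a token is available.
    if not bucket.allow():
        return "rejected: rate limit reached"
    return "ok"


fake_now = [0.0]  # manual clock for the demo
bucket = TokenBucket(capacity=2, rate=1.0, clock=lambda: fake_now[0])
print([call_inventory_service(bucket) for _ in range(3)])
# ['ok', 'ok', 'rejected: rate limit reached']
fake_now[0] = 1.0  # one second later, one token has been refilled
print(call_inventory_service(bucket))  # ok
```

Rejecting early keeps a burst from one caller from piling up on the downstream component; in practice the caller would retry later or return an error to its own client.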

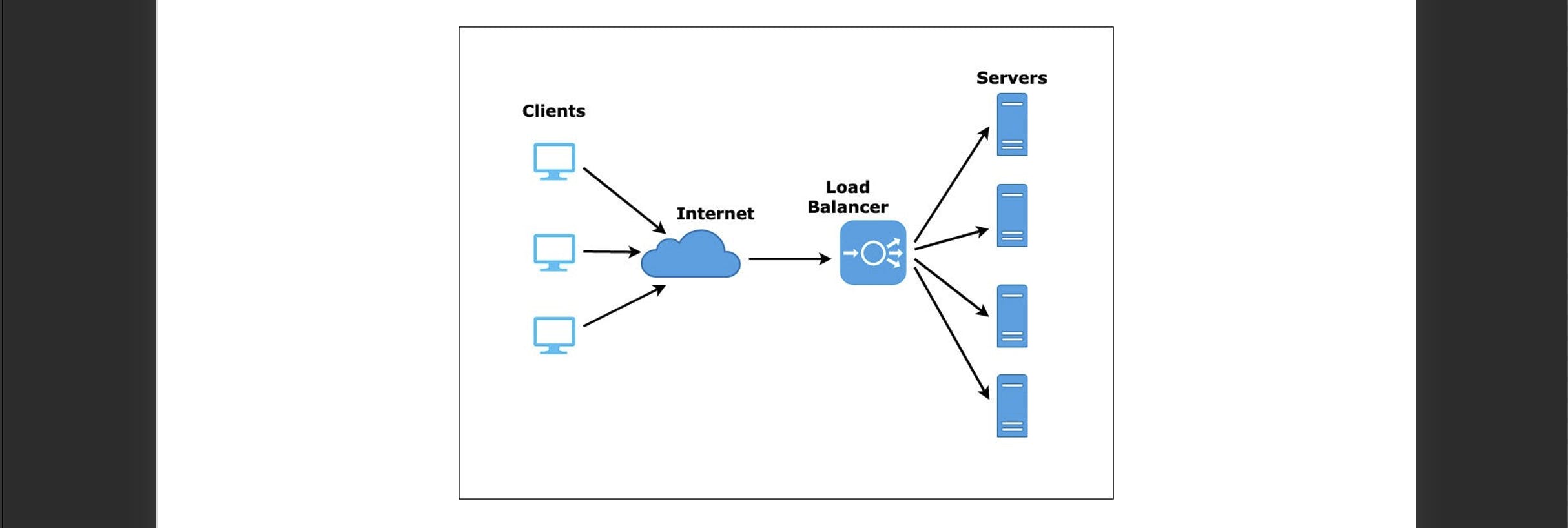

Section 2: Load balancers

Load balancers are devices or services that distribute incoming traffic across multiple servers, reducing the load on each server so that no single server is overwhelmed.

Note: It is good practice to keep two load balancers, one active and one passive (standby), so that if the active one goes down, the passive one can take over distributing the load.
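A minimal sketch of the active-passive idea, assuming each load balancer node exposes a `healthy` flag that some health check (not shown) keeps up to date; `LoadBalancerNode` and `pick_active` are hypothetical names. Real deployments often fail over by moving a shared virtual IP or DNS record rather than through application code like this.

```python
from dataclasses import dataclass


@dataclass
class LoadBalancerNode:
    name: str
    healthy: bool = True  # kept up to date by a health check (not shown)


def pick_active(primary: LoadBalancerNode, standby: LoadBalancerNode) -> LoadBalancerNode:
    """Send traffic through the primary while it is healthy, else fail over to the standby."""
    if primary.healthy:
        return primary
    if standby.healthy:
        return standby
    raise RuntimeError("both load balancers are down")


primary = LoadBalancerNode("lb-primary")
standby = LoadBalancerNode("lb-standby")
print(pick_active(primary, standby).name)  # lb-primary
primary.healthy = False  # simulate the active load balancer going down
print(pick_active(primary, standby).name)  # lb-standby
```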

Types of Load Balancers:

Load balancers themselves fall into two categories:

Hardware load balancers

Software load balancers

Hardware load balancers:

These are physical appliances installed at a physical location, such as a data center.

They are more expensive than software load balancers.

Since they are physical devices, it is easier to apply stronger security to them.

Their resources (CPU, memory, network capacity) are dedicated to load balancing.

They can typically handle more load than a software load balancer.

Used by large organisations such as AWS, Azure and GCP.

Software load balancers:

They offer more flexibility in configuration.

They run on shared resources (VMs or Docker containers).

They allow more customization.

They are cost-effective.

Used in cloud services such as AWS, Azure and GCP when configuring servers.

Load Balancers by OSI Layer:

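Network Layer (OSI Layers 3 and 4):

- Traffic is distributed to servers based on network-level information such as IP addresses (and ports), without inspecting the request content.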
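As an illustration of IP-based distribution, the sketch below maps a client's IP address to a backend using nothing but that address. The backend addresses are hypothetical, and a plain modulo over the IP's integer value is a simplifying assumption; real network load balancers typically hash more fields, such as ports and protocol.

```python
import ipaddress

# Hypothetical backend pool.
SERVERS = ["10.0.0.11", "10.0.0.12", "10.0.0.13"]


def pick_by_ip(client_ip: str, servers: list[str]) -> str:
    # Only the client's IP address is used; the request payload is never inspected.
    index = int(ipaddress.ip_address(client_ip)) % len(servers)
    return servers[index]


print(pick_by_ip("192.168.1.20", SERVERS))  # 10.0.0.11
print(pick_by_ip("192.168.1.21", SERVERS))  # 10.0.0.12
```

Because the choice depends only on the address, the same client keeps landing on the same server as long as the pool does not change.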

Application Layer (OSI Layer 7):

- Traffic is distributed to servers based on the request data itself, such as the URL path, headers or cookies.
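For content-based routing, a minimal sketch might choose a server pool from the request's URL path. The route table and pool names are hypothetical; real application-layer balancers can also match on headers, cookies or hostnames.

```python
# Hypothetical routing table: URL path prefix -> pool of backend servers.
ROUTES = {
    "/api/": ["api-1", "api-2"],
    "/api/admin/": ["admin-1"],
    "/static/": ["static-1"],
}
DEFAULT_POOL = ["web-1"]


def pick_pool(path: str) -> list[str]:
    # Check longer prefixes first so the most specific rule wins.
    for prefix in sorted(ROUTES, key=len, reverse=True):
        if path.startswith(prefix):
            return ROUTES[prefix]
    return DEFAULT_POOL


print(pick_pool("/api/orders/42"))    # ['api-1', 'api-2']
print(pick_pool("/api/admin/users"))  # ['admin-1']
print(pick_pool("/about"))            # ['web-1']
```

Within the chosen pool, any of the load balancing algorithms in this article could then pick the individual server.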

Load Balancing Algorithms:

Round Robin Algorithm:

- Imagine you have a bunch of servers and you want to be fair. Round Robin sends each new request to the next server in a fixed rotation, so every server gets an equal share. It's like taking turns: everyone gets a fair shot.
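A minimal round-robin sketch in Python, rotating through a hypothetical list of server names with `itertools.cycle`:

```python
from itertools import cycle


class RoundRobin:
    def __init__(self, servers: list[str]) -> None:
        self._rotation = cycle(servers)  # endless s1, s2, s3, s1, ...

    def pick(self) -> str:
        return next(self._rotation)


rr = RoundRobin(["s1", "s2", "s3"])
print([rr.pick() for _ in range(5)])  # ['s1', 's2', 's3', 's1', 's2']
```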

Least Connection:

- Think of it as picking the server with the shortest line. The request goes to the server with the fewest active connections, which is usually the quickest way to get it answered.
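A minimal least-connections sketch, assuming the balancer is told when a request starts (`acquire`) and finishes (`release`) so it can count active connections per server. Ties go to the server listed first; the class and method names are hypothetical.

```python
class LeastConnections:
    def __init__(self, servers: list[str]) -> None:
        self.active = {server: 0 for server in servers}  # open connections per server

    def acquire(self) -> str:
        # min() returns the first server with the smallest count, so ties go to the earlier one.
        server = min(self.active, key=lambda s: self.active[s])
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        self.active[server] -= 1  # call when the request finishes


lb = LeastConnections(["s1", "s2"])
first, second, third = lb.acquire(), lb.acquire(), lb.acquire()
print(first, second, third)  # s1 s2 s1
lb.release("s2")
print(lb.acquire())  # s2
```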

Least response time:

- This one is about speed. Your request goes to the server that has been responding fastest recently (some implementations also take its active connections into account). It's like choosing the fastest cashier at the supermarket.
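The article does not specify how response time is measured. The sketch below assumes an exponentially weighted moving average of observed response times per server (`alpha` is a hypothetical tuning parameter) and tries every server once before comparing.

```python
from typing import Optional


class LeastResponseTime:
    def __init__(self, servers: list[str], alpha: float = 0.5) -> None:
        self.alpha = alpha  # weight of the newest sample in the moving average
        self.avg: dict[str, Optional[float]] = {s: None for s in servers}

    def pick(self) -> str:
        untried = [s for s, a in self.avg.items() if a is None]
        if untried:
            return untried[0]  # measure every server at least once
        return min(self.avg, key=lambda s: self.avg[s])

    def record(self, server: str, seconds: float) -> None:
        previous = self.avg[server]
        if previous is None:
            self.avg[server] = seconds
        else:
            self.avg[server] = self.alpha * seconds + (1 - self.alpha) * previous


lrt = LeastResponseTime(["s1", "s2"])
lrt.record("s1", 0.200)
lrt.record("s2", 0.050)
print(lrt.pick())  # s2
lrt.record("s2", 0.450)  # s2 slows down: its average becomes about 0.25 s
print(lrt.pick())  # s1
```

A moving average smooths out one-off slow requests while still reacting when a server stays slow.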

Sticky algorithm:

- Imagine you always want to talk to the same person. With sticky sessions (also called session affinity), all requests from the same client go to the same server, which matters when that server holds the client's session data. It's like having a favourite go-to person.
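A minimal sticky-session sketch, assuming each request carries a session ID (for example from a cookie). The first request of a session is assigned round-robin, and the assignment is remembered so later requests return to the same server. `StickySessions` is a hypothetical name.

```python
class StickySessions:
    def __init__(self, servers: list[str]) -> None:
        self.servers = servers
        self.assignments: dict[str, str] = {}  # session ID -> server
        self._next = 0

    def pick(self, session_id: str) -> str:
        if session_id not in self.assignments:
            # First request of this session: assign a server round-robin and remember it.
            self.assignments[session_id] = self.servers[self._next % len(self.servers)]
            self._next += 1
        return self.assignments[session_id]


sticky = StickySessions(["s1", "s2"])
print(sticky.pick("alice"), sticky.pick("bob"), sticky.pick("alice"))  # s1 s2 s1
```

This sketch leaves out what happens when a session's server goes down; a real balancer would have to reassign those sessions.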

Weighted Round Robin:

- Here, we consider the capacity of each server. Each server is given a weight, and more powerful servers (higher weights) receive proportionally more requests. It's like assigning more tasks to the stronger workers in a team.
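One common way to implement weighted round robin is the "smooth" variant sketched below, which interleaves picks instead of sending a burst of consecutive requests to the heaviest server. The server names and weights are hypothetical.

```python
class WeightedRoundRobin:
    """Smooth weighted round robin: higher-weight servers are picked more often, interleaved."""

    def __init__(self, weights: dict[str, int]) -> None:
        self.weights = weights
        self.current = {server: 0 for server in weights}
        self.total = sum(weights.values())

    def pick(self) -> str:
        # Every server earns its weight; the highest scorer is chosen and pays back the total.
        for server, weight in self.weights.items():
            self.current[server] += weight
        chosen = max(self.current, key=lambda s: self.current[s])
        self.current[chosen] -= self.total
        return chosen


wrr = WeightedRoundRobin({"big": 5, "small-1": 1, "small-2": 1})
print([wrr.pick() for _ in range(7)])
# ['big', 'big', 'small-1', 'big', 'small-2', 'big', 'big']
```

Over each cycle of 7 picks, "big" is chosen 5 times and each small server once, matching the 5:1:1 weights.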

Additional Information:

What are clients and servers?

Client: A client refers to any entity or device that initiates a request for data or services from another entity, known as the server. Clients can take various forms, such as web browsers, mobile apps, or devices seeking information or functionality from a server.

Server: A server is a persistent and continuously running process on a computer designed to handle incoming requests and provide corresponding responses. Servers are dedicated to serving clients, managing resources, and executing tasks as requested. They play a pivotal role in facilitating communication and data exchange in networked environments.

What is Throughput?

Throughput is a measure of work accomplished within a specific timeframe. In computing, it is often used to gauge the rate at which a system processes or handles tasks.

Example: Consider an application that processes 500 requests within a 10-second window. Its throughput is 500 / 10 = 50 requests per second.
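The same calculation can be done from raw measurements. This sketch assumes we log the completion time (in seconds) of every request and count those that finish inside the window; the function name and the sample data are hypothetical.

```python
def throughput(completion_times: list[float], window_start: float, window_seconds: float) -> float:
    """Requests completed per second within [window_start, window_start + window_seconds)."""
    window_end = window_start + window_seconds
    completed = sum(1 for t in completion_times if window_start <= t < window_end)
    return completed / window_seconds


# 500 requests finishing every 0.02 s, starting at t = 0 (hypothetical data).
times = [i * 0.02 for i in range(500)]
print(throughput(times, window_start=0.0, window_seconds=10.0))  # 50.0
```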

Advantages of measuring throughput:

Performance Measurement: Throughput helps assess the efficiency and effectiveness of a system by quantifying the work completed over time.

Capacity Planning: Facilitates capacity planning by predicting system performance under different loads, aiding in preventing potential overloads.

Bottleneck Identification: Throughput is instrumental in identifying bottlenecks (points in a system where the flow of data is constrained), allowing for targeted optimizations.

How to improve throughput:

Scaling: Increase system capacity by adding more servers or resources, so that a larger volume of requests can be handled concurrently.

Code Optimization: Enhance efficiency by optimizing code to reduce execution time, making the system more responsive and capable of handling more requests.

Caching/Compression: Cache frequently requested data to avoid recomputing or refetching it, and compress data to reduce the amount transferred over the network; both improve response times.

Database Optimization: Optimize database queries, indexes, and overall structure to minimize response times and enhance data retrieval efficiency.

Network Optimization: Fine-tune network configurations and protocols to ensure efficient data transfer and minimize latency.

Parallel Processing/Multithreading: Utilize parallel processing and multithreading techniques to execute multiple tasks simultaneously, distributing the workload and improving overall system performance.
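A minimal sketch of how concurrency raises throughput for I/O-bound work, using Python's `concurrent.futures.ThreadPoolExecutor`. `handle_request` is a hypothetical handler in which `time.sleep` stands in for waiting on a database or network call.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(request_id: int) -> int:
    time.sleep(0.1)  # stands in for waiting on a database or network call
    return request_id


def run_sequential(request_ids: list[int]) -> list[int]:
    return [handle_request(r) for r in request_ids]


def run_parallel(request_ids: list[int], workers: int = 10) -> list[int]:
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in the same order as the inputs.
        return list(pool.map(handle_request, request_ids))


ids = list(range(10))
start = time.perf_counter()
run_sequential(ids)
sequential = time.perf_counter() - start

start = time.perf_counter()
run_parallel(ids)
parallel = time.perf_counter() - start

print(f"sequential: {sequential:.2f}s, parallel: {parallel:.2f}s")
```

In CPython, threads help mainly when tasks spend their time waiting on I/O; for CPU-bound work, `ProcessPoolExecutor` is usually the better fit because of the global interpreter lock.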

Thanks for reading this far. I hope I was able to explain the concepts well. If you face any issues, please feel free to reach out; I'd be happy to help.

You can always connect with me on LinkedIn to share feedback or queries.