A distributed system is a cluster of separate machines that are connected over a network and share data and resources with one another through it. The cluster is generally managed by a central machine.

When to use a distributed system?

Let's assume we have a data processing system. Over time, the data being processed grows beyond what this system can handle. We can try to archive some of the data, but we cannot keep that up for long, and we would have to unarchive the data whenever it is needed again.

What we can do in this case is bring in a second system, move the new load onto it, and from then on balance the load between the two systems. Dealing with the situation in this way is called scaling the system.
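As a rough illustration of balancing load between two systems, here is a minimal round-robin sketch. The function name `balance`, the system names, and the job labels are all hypothetical; real load balancers usually also account for each system's current load and health.

```python
from itertools import cycle


def balance(requests, systems):
    """Assign each request to a system in round-robin order."""
    assignment = {name: [] for name in systems}
    # cycle() repeats the system names endlessly; zip() stops when requests run out.
    for request, name in zip(requests, cycle(systems)):
        assignment[name].append(request)
    return assignment


jobs = [f"job-{i}" for i in range(6)]
result = balance(jobs, ["system-1", "system-2"])
print(result["system-1"])  # ['job-0', 'job-2', 'job-4']
print(result["system-2"])  # ['job-1', 'job-3', 'job-5']
```

Each system ends up with half of the jobs, which is the simplest form of the balancing described above.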

Scaling:

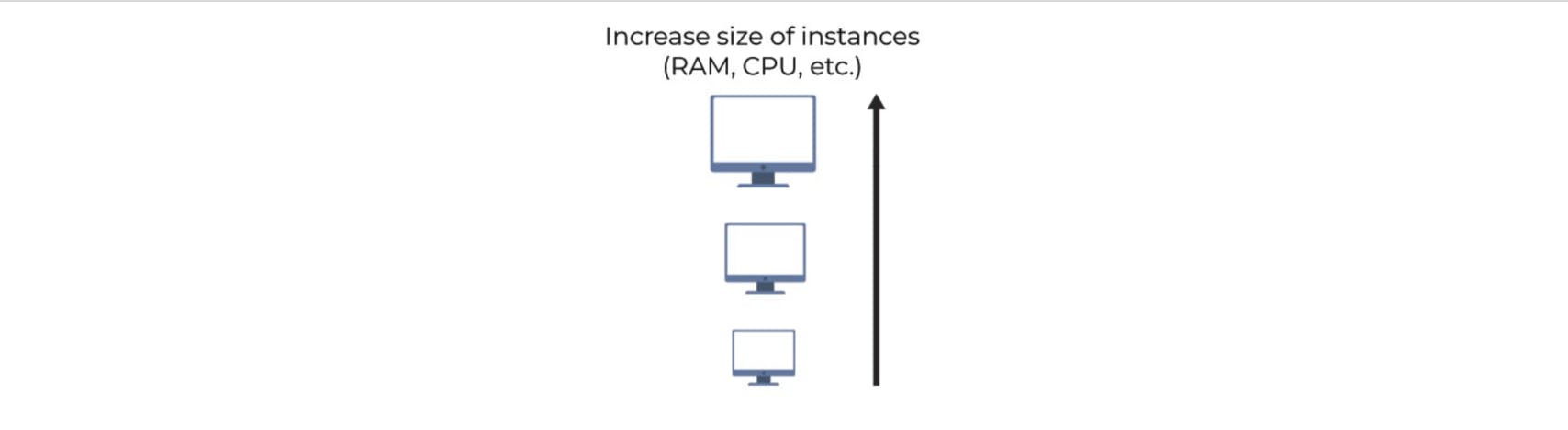

Vertical scaling:

Increasing or decreasing the computational power of a single system is called vertical scaling. For example, adding more storage and CPU power to the same machine lets it handle a larger load.

However, beyond a certain point, only the cost keeps increasing while performance stagnates.

As the saying goes, we should not put all our eggs in one basket, and that applies here as well: since we are scaling just one system, if something happens to it we lose all our data.

Distributed systems (Horizontal scaling):

Increasing or decreasing the number of systems that handle the load, as required, is called horizontal scaling. These systems, all connected over a network, together form a distributed system.

Distributed systems are widely used now, and because the data lives in multiple places it is less prone to loss. Early implementations, however, lost data every now and then, mainly because of hardware failures and unreliable networks.

How to fix the above?

The answer is replication: even when one system goes down or is unreachable, the same data is available on another machine. This way, even if a failure occurs, it is handled gracefully.
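The idea of replication can be sketched in a few lines. This is a toy, in-memory model under simplifying assumptions: the `Node` and `ReplicatedStore` classes are hypothetical, writes are copied synchronously to every reachable node, and a node failure is simulated with a flag.

```python
class Node:
    """One machine in the cluster, holding its own copy of the data."""

    def __init__(self, name):
        self.name = name
        self.data = {}
        self.up = True


class ReplicatedStore:
    def __init__(self, nodes):
        self.nodes = nodes

    def write(self, key, value):
        # Copy the write to every node that is currently reachable.
        for node in self.nodes:
            if node.up:
                node.data[key] = value

    def read(self, key):
        # Serve the read from the first reachable node that has the key.
        for node in self.nodes:
            if node.up and key in node.data:
                return node.data[key]
        raise KeyError(key)


nodes = [Node("a"), Node("b"), Node("c")]
store = ReplicatedStore(nodes)
store.write("order-1", "paid")

nodes[0].up = False  # simulate a hardware failure on node "a"
print(store.read("order-1"))  # paid
```

Even with node "a" down, the read succeeds because nodes "b" and "c" hold the same data.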

Consistency(C):

A system is said to be consistent when numerous requests sent to it at the same time all receive the same data.

In a distributed system, when a change is made on one machine, it takes some time Δt to be replicated to all the other machines. For that duration Δt, the system is inconsistent.

Since replication makes the system consistent eventually, we call this type of system an eventually consistent system.

Note: Δt can be close to zero, but it cannot be exactly zero in a distributed system.
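To make the Δt window concrete, here is a small simulation that uses a logical clock instead of real time. The `LaggingReplica` class, its `delay` parameter (standing in for Δt), and the sample data are assumptions made only for this sketch.

```python
class LaggingReplica:
    """A replica that applies each write only after `delay` time units."""

    def __init__(self, delay):
        self.delay = delay
        self.pending = []  # list of (apply_at, key, value)
        self.data = {}

    def receive(self, now, key, value):
        # The write arrives now but only becomes visible after the delay.
        self.pending.append((now + self.delay, key, value))

    def read(self, now, key):
        # Apply every write whose delay has elapsed, then answer the read.
        ready = [p for p in self.pending if p[0] <= now]
        self.pending = [p for p in self.pending if p[0] > now]
        for _, k, v in ready:
            self.data[k] = v
        return self.data.get(key)


primary = {}
replica = LaggingReplica(delay=2)

primary["name"] = "Asha"
replica.receive(now=0, key="name", value="Asha")

print(primary["name"], replica.read(now=1, key="name"))  # Asha None
print(primary["name"], replica.read(now=2, key="name"))  # Asha Asha
```

At time 1 the two copies disagree (the inconsistent window), and at time 2 they agree again, which is what eventual consistency means.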

Availability(A):

A distributed system is said to be available if it gives a response every time we make a call to it.

For a system to be highly available, we must keep its downtime as low as possible. That involves maintaining the system and fixing any issue in as little time as possible.

The availability of a system is often calculated using the following formula:

Availability = Uptime / (Uptime + Downtime)
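As a quick worked example, availability can be computed as the ratio of uptime to total observed time. The sketch below assumes that common definition; the function name and the figures are hypothetical.

```python
def availability(uptime_hours, downtime_hours):
    """Fraction of the observation window during which the system was up."""
    total = uptime_hours + downtime_hours
    if total == 0:
        raise ValueError("observation window must be non-empty")
    return uptime_hours / total


# Hypothetical 30-day month (720 hours) with 9 hours of downtime.
value = availability(uptime_hours=711, downtime_hours=9)
print(f"{value:.3%}")  # 98.750%
```

Reducing the downtime in this example from 9 hours to under 1 hour would push the figure above 99.8%, which is why quick fixes matter so much for availability.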

Partition Tolerance(P):

A partition-tolerant system is one that keeps operating, and does not lose any part of its data, even if the connection between one system and another in the cluster breaks down.

While building a distributed system, we should build it in such a way that it is partition tolerant.

Note: The general scenarios in which a system needs to be partition tolerant are network failures and node failures.

Reliability:

Another important term here is the reliability of a system. It refers to the ability of a system to perform its task as expected each time a request is made to it.

Mathematically we would define reliability as:

Reliability = 1 - probability of failure

If we have a highly reliable system, we can also say that we have a highly available system.

The converse may not be true: a highly available system might simply be getting fixed as soon as errors occur, and in that case its reliability is still compromised.
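The reliability formula above can be sketched as follows. Estimating the probability of failure as the share of failed requests is an assumption made for this example, and the request counts are hypothetical.

```python
def reliability(total_requests, failed_requests):
    """Reliability = 1 - probability of failure, estimated from request counts."""
    if total_requests <= 0:
        raise ValueError("need at least one request")
    probability_of_failure = failed_requests / total_requests
    return 1 - probability_of_failure


# Hypothetical: 1000 requests were made, and 50 did not perform as expected.
score = reliability(total_requests=1000, failed_requests=50)
print(f"{score:.2f}")  # 0.95
```

A system like this may still respond to every call, so it can look highly available while being only 95% reliable.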

C.A.P. Theorem:

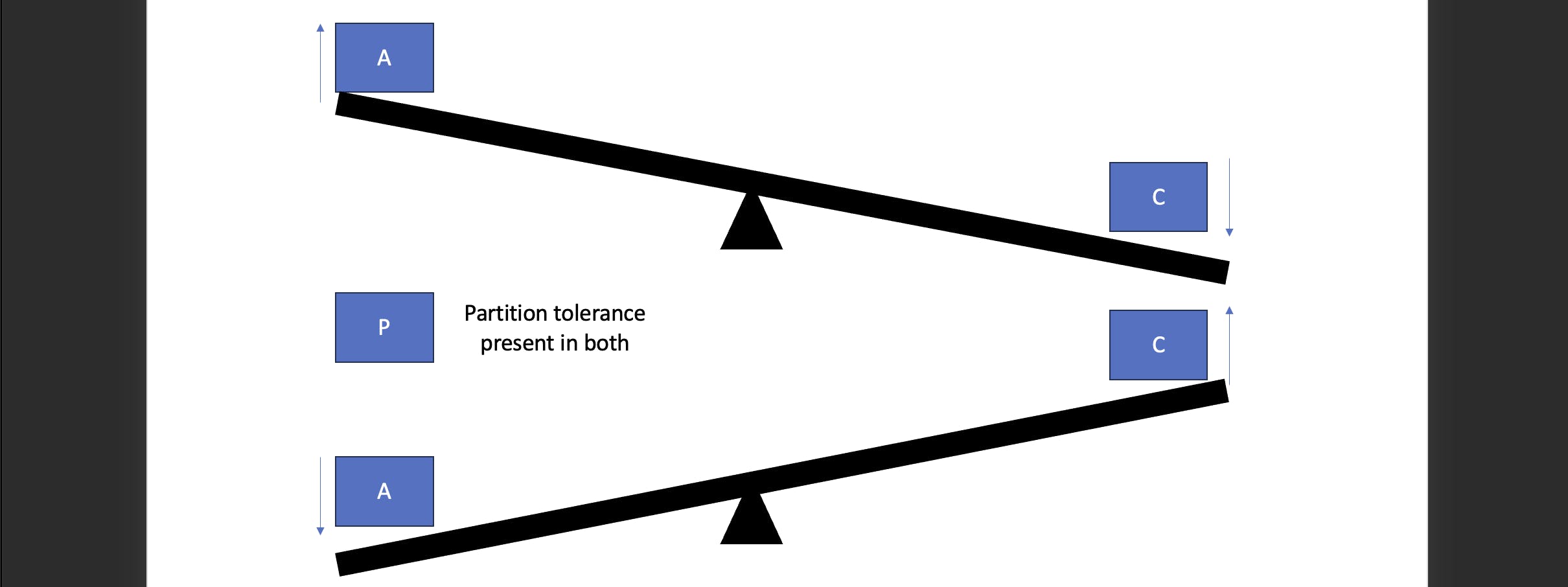

As per the C.A.P. theorem, we can have only two out of these three at a time.

When we want high availability and partition tolerance, we cannot maintain strict consistency; the system can only be eventually consistent.

Likewise, if you want your system to be consistent and partition tolerant, you will have to compromise on availability.

A system that is both consistent and available is likely to be a vertically scaled system. In that case we do not maintain replicas of the system, and hence we do not have partition tolerance.

To make a system highly consistent, we need to make it unavailable for the duration in which replication is being carried out across the whole cluster.
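The trade-off can be seen in a toy two-replica cluster. This is only a sketch under simplifying assumptions: the `TwoNodeCluster` class, its `mode` values, and the "replica 0 wins" reconciliation in `heal` are hypothetical choices, not how any particular database behaves.

```python
class Unavailable(Exception):
    """Raised when a CP cluster refuses a request during a partition."""


class TwoNodeCluster:
    def __init__(self, mode):
        if mode not in ("CP", "AP"):
            raise ValueError("mode must be 'CP' or 'AP'")
        self.mode = mode
        self.partitioned = False
        self.replicas = [{}, {}]

    def write(self, node, key, value):
        if self.partitioned:
            if self.mode == "CP":
                # Cannot replicate, so refuse the write rather than diverge.
                raise Unavailable("replicas cannot sync")
            # AP: accept the write locally; the other replica is now stale.
            self.replicas[node][key] = value
            return
        for replica in self.replicas:
            replica[key] = value

    def read(self, node, key):
        return self.replicas[node].get(key)

    def heal(self):
        # Naive reconciliation for this sketch: replica 0 wins on conflict.
        self.partitioned = False
        merged = {**self.replicas[1], **self.replicas[0]}
        self.replicas = [dict(merged), dict(merged)]


ap = TwoNodeCluster("AP")
ap.write(0, "seat", "free")
ap.partitioned = True
ap.write(0, "seat", "booked")  # still accepted: available but inconsistent
print(ap.read(0, "seat"), ap.read(1, "seat"))  # booked free
ap.heal()
print(ap.read(1, "seat"))  # booked

cp = TwoNodeCluster("CP")
cp.partitioned = True
try:
    cp.write(0, "seat", "booked")
except Unavailable as exc:
    print("rejected:", exc)  # rejected: replicas cannot sync
```

In AP mode the cluster keeps answering but the replicas disagree until the partition heals; in CP mode the replicas never disagree, but the write is refused.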

Note: In a real-world scenario, we cannot afford to lose any data, hence partition tolerance is a must. Cloud platforms such as Alibaba Cloud, AWS Cloud, and Azure have already taken care of partition tolerance.

Example:

IRCTC:

When Tatkal tickets go live, the system becomes unavailable because of the large volume of incoming traffic and seat transactions. But that unavailability is compensated for by consistency in payments and seat booking: no two people are given the same seat.
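The "no two people get the same seat" guarantee can be illustrated with a single-process analogy. This is not how IRCTC is implemented; the `SeatMap` class, the seat label, and the passenger names are hypothetical, and a lock stands in for whatever coordination a real booking system uses.

```python
import threading


class SeatMap:
    def __init__(self, seats):
        self._owner = {seat: None for seat in seats}
        self._lock = threading.Lock()

    def book(self, seat, passenger):
        # The lock makes check-and-assign atomic, so a seat has at most one owner.
        with self._lock:
            if self._owner[seat] is None:
                self._owner[seat] = passenger
                return True
            return False


seats = SeatMap(["S1-21"])
results = []


def try_booking(passenger):
    results.append(seats.book("S1-21", passenger))


threads = [
    threading.Thread(target=try_booking, args=(name,))
    for name in ("asha", "ravi", "meera")
]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(sorted(results))  # [False, False, True]
```

Exactly one of the three competing requests succeeds. The others wait for the lock and are then turned away, which mirrors trading some availability for consistency.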

Facebook:

Facebook is a highly available application. For it, being unavailable for even a short duration means lost revenue. Users are okay with the experience even if the picture they see is a few seconds out of date. Here eventual consistency is maintained in order to strike a balance with high availability.

Thanks for coming this far in the article. I hope I was able to explain the concept well enough. If you run into any issues, please feel free to reach out to me; I'd be happy to help.
